by: Mohammad Isaqzadeh, Princeton University

I arrived in Kabul, Afghanistan, on January 1st, 2020, to conduct two rounds of a survey studying the relationship between violence and religiosity. It was a euphoric moment for me. I was finally able to conduct my dissertation fieldwork and test the theory I had spent three years developing. While the political science literature had focused on whether religion, particularly Islam, caused or contributed to the onset or escalation of armed conflict, I argued that the causal relationship could also run in the reverse direction, from armed conflict to religion. Exposure to violence could lead to death anxiety and a diminished sense of control, which in turn would reinforce religiosity as a psychological coping mechanism. To test this theory, I had chosen eight high-risk neighborhoods in Kabul that were likely to experience intensified insurgent attacks over the following months. These neighborhoods were high-risk because of their proximity to important military installations or to symbolic places of worship for the Shia religious minority, who were frequently targeted by insurgent groups. I had also selected eight other neighborhoods that were demographically similar to the high-risk neighborhoods but less likely to experience intensified insurgent attacks. My plan was to conduct a baseline survey in the winter and a follow-up survey with the same respondents the following fall, after the expected escalation of violence. The two survey rounds would allow me to test whether, and to what extent, exposure to violence affects religious intensity among civilians.

Face-to-Face Survey Challenges

Before starting my Ph.D., I worked in Afghanistan for five years, during which I managed several large-scale, nationally representative surveys. Those years gave me extensive experience with, and insight into, the challenges and prospects of conducting surveys in fragile contexts. I had learned about various shortcuts that enumerators could take to make their work easier but that undermined data quality and the integrity of a survey. To monitor the survey effectively and detect any issues that undermined data quality, I decided to use SurveyCTO, an electronic data collection platform. With SurveyCTO, data were collected on smartphones or tablets and uploaded to the server automatically whenever the device had an internet connection. In addition, the platform offered numerous quality-check features.

For this survey, I asked enumerators to go to the target neighborhoods and use a random-walk procedure to select a prespecified number of households in each neighborhood. One challenge in conducting a survey based on a random-walk procedure is making sure that enumerators actually visit the neighborhood and follow the procedure for selecting households. SurveyCTO allowed me to design the questionnaire so that the device automatically captured the location of an interview multiple times during the interview. Furthermore, the platform allowed hidden location recording: whenever the interview reached a particular question, the platform recorded the location automatically, without requiring the interviewer to press any button. In addition, the platform recorded the number of seconds an interviewer spent on each question before moving to the next one.
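As a rough illustration of how hidden location captures can be used, the sketch below checks whether each GPS reading recorded during an interview falls inside an assigned neighborhood. It assumes the readings have already been exported as (latitude, longitude) pairs in decimal degrees and approximates a neighborhood as a circle around a center point; the coordinates, the 1 km radius, and the function names are hypothetical and not part of SurveyCTO.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def outside_neighborhood(gps_points, center, radius_m):
    """Return the GPS readings that lie farther than radius_m from the center."""
    clat, clon = center
    return [
        (lat, lon)
        for lat, lon in gps_points
        if haversine_m(lat, lon, clat, clon) > radius_m
    ]


# Hypothetical neighborhood center and three readings from one interview.
center = (34.5300, 69.1700)
interview_points = [(34.5310, 69.1710), (34.5305, 69.1695), (34.6000, 69.2500)]
flagged = outside_neighborhood(interview_points, center, radius_m=1000)
print(flagged)  # [(34.6, 69.25)]
```

Real neighborhood boundaries are rarely circular; a point-in-polygon test would be more precise, but the circle keeps the sketch dependency-free.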

I hired and trained fifteen enumerators. With all these measures in place, I was confident that we would collect high-quality data. I made it clear to the enumerators that I would monitor the survey very closely and would not tolerate issues that undermined data quality. Nonetheless, I had to dismiss one enumerator who, in multiple instances, submitted interviews that were too short. The interview log file showed that the enumerator had spent less than 6 seconds on questions that, on average, took more than 20 seconds just to read aloud, let alone to give the respondent time to answer. Based on the interview location data, the other enumerators seemed to visit the neighborhoods they had been assigned and to spend a reasonable amount of time on each interview.
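A minimal sketch of this kind of timing check, assuming per-question durations (in seconds) have already been extracted for each interview, flags interviews in which too large a share of questions were answered faster than a minimum time. The 6-second cutoff echoes the case described above; the 50 percent share threshold, the interview IDs, and the data layout are hypothetical.

```python
def speeding_share(durations, min_seconds):
    """Return the share of questions on which less than min_seconds was spent."""
    if not durations:
        return 0.0
    fast = sum(1 for d in durations if d < min_seconds)
    return fast / len(durations)


def flag_interviews(interviews, min_seconds=6, max_share=0.5):
    """Return IDs of interviews where more than max_share of questions were too fast.

    interviews maps an interview ID to a list of per-question durations in seconds.
    """
    return [
        iid
        for iid, durations in interviews.items()
        if speeding_share(durations, min_seconds) > max_share
    ]


# Hypothetical timing data for three interviews (seconds per question).
interviews = {
    "int_001": [22, 25, 18, 30],
    "int_002": [4, 5, 3, 21],
    "int_003": [5, 24, 19, 27],
}
print(flag_interviews(interviews))  # ['int_002']
```

A share-based rule tolerates the occasional quick answer (such as a short yes/no item) while still catching interviews that were rushed throughout.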

Unfortunately, we had conducted only 300 of the targeted 1,500 interviews when Covid-19 began to spread in Afghanistan. On March 20, 2020, my university instructed me to stop the fieldwork immediately. It was a heartbreaking experience. After months of preparation, I had to stop the survey and leave Afghanistan. At the time, I was not sure when or how I would be able to return to the field and complete the survey.

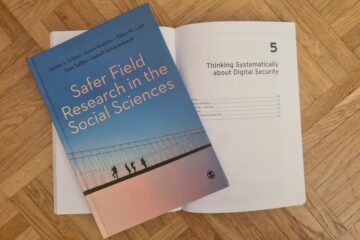

Mohammad Isaqzadeh training enumerators, Kabul, Afghanistan, February 15th, 2020.

Phone Survey: Challenges and Prospects

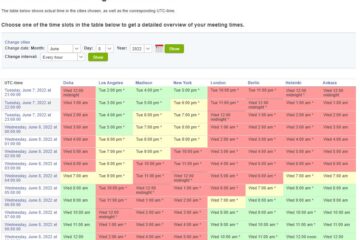

By June 2020, I realized that the Covid-19 pandemic might not end anytime soon and that face-to-face interviews might not be an option anymore. I explored the option of conducting interviews over the phone. First, I needed to obtain permission for a phone-based survey from the Princeton Institutional Review Board (IRB).¹ I was able to obtain IRB approval to conduct the survey over the phone and to record the interviews for monitoring quality.

One challenge that I faced was obtaining residents' phone numbers. Luckily, the government had instructed neighborhood representatives to compile lists of poor residents in each neighborhood for distributing bread and other pandemic-related relief. Since Kabul was under quarantine for three months, the Kabul Municipality distributed bread through bakeries to needy households using the lists provided by neighborhood representatives. Before the start of the face-to-face survey, I had obtained permission and authorization letters for conducting the survey from more than five government agencies, including the Kabul Municipality. We used the letters from the Kabul Municipality to ask neighborhood representatives to share their lists with us. Overall, we collected around 5,000 contact numbers from the neighborhoods I had planned to survey and randomly selected one third of them. Although those listed were poorer than typical Kabul residents, they better represented the overall population of Afghanistan. Most Kabul residents worked either for the government or for local or international NGOs and usually earned much more than Afghans in the provinces. Those in my sample had an average household income of 10,000 Afs, which was comparable to the national average household income.
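A random draw like the one-third selection described above can be made reproducible with a fixed seed. The sketch below assumes the numbers are available as strings, removes duplicate listings (for instance, the same neighbor's number listed by more than one household) before sampling, and draws a simple random sample without replacement; the placeholder numbers and the seed are hypothetical.

```python
import random


def select_third(phone_numbers, seed=2020):
    """Draw a simple random sample of one third of the unique phone numbers."""
    unique = sorted(set(phone_numbers))  # drop duplicate listings; sort so the draw is reproducible
    k = len(unique) // 3
    rng = random.Random(seed)  # fixed seed so the selection can be re-run and audited
    return rng.sample(unique, k)


numbers = [f"07{n:08d}" for n in range(5000)]  # hypothetical placeholder numbers
sample = select_third(numbers)
print(len(sample))  # 1666
```

Stratifying the draw by neighborhood would be a reasonable alternative if each neighborhood needs a fixed share of the sample.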

We offered respondents around 2 USD worth of mobile top-up for their participation in the phone survey. Mobile top-up is credit that phone owners can use for talk time; mobile plans in Afghanistan were mainly prepaid. I used a local company to send mobile top-up to the respondents who completed interviews. I deposited money into the company's bank account, which was then converted into top-up credit in my online account. I used that account to transfer top-up credit remotely to participants' phones. The arrangement was efficient and worked very well.

Phone surveys had both advantages and disadvantages compared to face-to-face interviews. First, once phone numbers were obtained, phone surveys were logistically easier to conduct. I relied on the same enumerators, who did not have to travel to different neighborhoods and could conduct interviews from the comfort of their homes. This reduced transportation costs and saved time. It also reduced the enumerators' risk of exposure to violence, which was a real danger in Kabul. Second, it was easier to make high-quality recordings of phone interviews for back-checks and for assessing the quality of interviews.

More importantly, it was easier to reach respondents over the phone than in person. Fortunately, mobile phone ownership is high in urban Afghanistan: almost every household has at least one mobile phone. A handful of households that did not own a phone had listed their neighbors' numbers instead. Given the cultural sensitivities and gender segregation norms in Afghanistan, we had planned to interview male respondents in both the face-to-face and phone surveys. Men were rarely home when enumerators visited households during working hours, and it was not safe to visit neighborhoods in Kabul after dark. It was, however, easier to arrange a phone interview after working hours, since the enumerators conducted interviews from their homes.

The main disadvantage of a phone survey was the difficulty of building rapport and gaining respondents' trust. We had obtained approval letters from multiple government agencies for conducting the survey, including the Afghanistan Ministry of Health. When the enumerators introduced themselves, they mentioned that the survey had been approved by the Ministry of Health and the Kabul Municipality and that copies of the approval letters could be sent to respondents via WhatsApp. The approval letters helped in most cases, but some respondents did not trust an unknown person on the other end of the line. This suspicion was understandable given that Afghanistan was at war and insurgent groups were active in Kabul. Not surprisingly, refusal rates were higher, and more interviews were broken off before completion, than in the face-to-face survey. Nonetheless, it was a relatively successful exercise. More than 65 percent of the calls led to complete interviews, and respondents welcomed being called for the second round of the survey. This response rate was satisfactory given that the survey included a few very sensitive questions about respondents' views on policies promoted by insurgent groups. Only in groups where insurgents enjoyed higher overall support were these questions answered reluctantly; other respondents seemed to answer them without reservation.

Listening to the audio recordings of interviews taught me new lessons about survey quality. In the past, I had monitored and observed many face-to-face interviews to assess enumerators' skills, but those interviews did not always reflect how the enumerators conducted interviews in the field: knowing that I was observing them, the enumerators always performed at their best. Listening to randomly selected recordings of phone interviews gave me more insight into the issues that undermined data quality.

First, I had usually welcomed enumerators' rephrasing questions from formal language into a colloquial dialect that made the questions more comprehensible to respondents, particularly illiterate ones. Listening to the phone interviews, I realized that when enumerators rephrased a question in colloquial language, they sometimes changed its meaning entirely. Second, enumerators usually have an incentive to conduct interviews quickly and minimize the time they spend on each one. They may nudge a respondent to answer questions in a particular way, without giving the respondent enough time to understand the question, make up his/her mind, and articulate his/her answer. Enumerators may do this deliberately to save time or unintentionally. Because enumerators administer the same questionnaire many times, they may underestimate how much time a person hearing a question for the first time needs to understand it and articulate an answer. As a result, they may not give respondents enough time to answer properly, and the collected data may not reflect the respondents' opinions. Even though all questions in my surveys were closed-ended rather than open-ended, the enumerators sometimes rushed and did not give respondents enough time to think and answer thoughtfully.

To mitigate this issue, most electronic data collection platforms, including SurveyCTO, allow you to set a minimum time that an enumerator must spend on a question before moving to the next. More importantly, it is essential to have a robust back-check system in place for monitoring and assessing interview quality. Audio recordings are key to assessing interviews and to raising this issue with enumerators who may, intentionally or unintentionally, prompt answers.
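To show what a back-check comparison involves, the sketch below compares an enumerator's entries with a monitor's re-entry of the same recorded interviews and reports each disagreement along with an overall discrepancy rate. It is a plain-Python illustration, not a replacement for dedicated back-check tools, and it assumes both data sets are keyed by interview ID and question name; the IDs and answer codes are hypothetical.

```python
def find_discrepancies(enumerator_data, monitor_data):
    """Compare enumerator and monitor entries for interviews and questions present in both.

    Each argument maps interview ID -> {question name: answer code}.
    Returns (list of (interview_id, question, enumerator_answer, monitor_answer), rate).
    """
    discrepancies = []
    compared = 0
    for iid in sorted(enumerator_data.keys() & monitor_data.keys()):
        enum_answers = enumerator_data[iid]
        mon_answers = monitor_data[iid]
        for question in sorted(enum_answers.keys() & mon_answers.keys()):
            compared += 1
            if enum_answers[question] != mon_answers[question]:
                discrepancies.append(
                    (iid, question, enum_answers[question], mon_answers[question])
                )
    rate = len(discrepancies) / compared if compared else 0.0
    return discrepancies, rate


# Hypothetical answer codes for two back-checked interviews.
enumerator = {
    "int_001": {"q1": 2, "q2": 4, "q3": 1},
    "int_002": {"q1": 3, "q2": 3, "q3": 5},
}
monitor = {
    "int_001": {"q1": 2, "q2": 3, "q3": 1},
    "int_002": {"q1": 3, "q2": 3, "q3": 5},
}
diffs, rate = find_discrepancies(enumerator, monitor)
print(diffs)  # [('int_001', 'q2', 4, 3)]
```

Sorting interview IDs and question names keeps the report in a stable order between runs, which makes it easier to track whether the same questions keep producing disagreements.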

Some Tips for Other Researchers

Phone surveys can be a good alternative to face-to-face surveys, particularly at times or in places where face-to-face interviews are unaffordable or infeasible. With a list of phone numbers, researchers can interview people regardless of how far they are from the enumerators. Here are a few tips on how to improve the integrity and quality of data collection in phone surveys.

- Consider providing reasonable incentives (such as top-up credit or monetary compensation) to participants. Talking over the phone for 20 or 30 minutes is much more cumbersome than talking face-to-face, and without incentives, participation in phone surveys could be much lower than in face-to-face surveys. Nonetheless, the compensation should not be so large that it raises respondents' suspicion or changes their expectations in ways that could affect their responses.

- If the survey includes sensitive questions, move them to the end of the survey. As part of the consent process, respondents should be informed before the start of an interview that they may decline to answer any question they do not feel comfortable answering and that they may end the interview at any time. Placing sensitive questions at the end ensures that you still obtain answers to the non-sensitive questions if a respondent decides to end the interview upon reaching the sensitive ones.

- Back-checks are essential for assessing the quality of the interviews conducted. A researcher should audio record the interviews and have another individual (a monitor) listen to the audio files and re-enter the responses into the same questionnaire. There are packages in R (and perhaps also in Stata and other statistical programs) that make it easy to check discrepancies between the data entered by an enumerator and by a monitor.² Since a back-check shows only where the two entries disagree, not which one is more accurate, you need to train the monitors well and have confidence in them. Alternatively, you could have a third person listen to the interview to determine whether a discrepancy stems from an error by the enumerator or by the monitor.

- Partial audio recording: if the surveys are very long, the audio files may end up too large to upload to the electronic data collection server. SurveyCTO allows you to decide whether to record the entire survey, only part of it, or randomly selected sections. To save device memory and reduce the effort needed to re-enter interview data, you could record surveys only partially, for example only the sensitive or challenging questions.

- Our research findings depend heavily on, and are only as good as, the quality of the interviews conducted. Enumerators' interests are often at odds with those of the researcher. On the one hand, a researcher's findings are valuable only if they reflect the respondents' views, which can be obtained only if a respondent understands the survey questions and is able and willing to express his/her opinion. Enumerators, on the other hand, tend to, and have an incentive to, save time and conduct each interview as quickly as possible. To align enumerators' interests with those of the researcher, one needs to change the enumerators' incentives by rewarding them for careful interviews and by setting a minimum time needed to conduct an interview properly. For example, pay more for interviews with the fewest errors, or reward top-performing enumerators.
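One way to put such a reward scheme into practice is to rank enumerators on back-check results while penalizing interviews that fall below a minimum duration. In the sketch below, which is an illustration rather than the scheme used in this study, enumerators are ordered first by the share of their interviews shorter than a minimum length and then by their back-check error rate. The record fields, the 20-minute minimum, and the sample data are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class InterviewQC:
    enumerator: str
    minutes: float  # total interview length
    errors: int     # back-check discrepancies found in this interview
    checked: int    # questions compared during the back-check


def rank_enumerators(records, min_minutes=20.0):
    """Return (enumerator, error_rate, short_share) tuples, best first.

    error_rate: back-check discrepancies per question checked.
    short_share: share of the enumerator's interviews shorter than min_minutes.
    Sorting uses short_share first, then error_rate, so rushed interviews
    always count against an enumerator before accuracy is considered.
    """
    totals = defaultdict(lambda: [0, 0, 0, 0])  # errors, checked, short, interviews
    for r in records:
        t = totals[r.enumerator]
        t[0] += r.errors
        t[1] += r.checked
        t[2] += int(r.minutes < min_minutes)
        t[3] += 1
    ranking = []
    for name, (errors, checked, short, n) in totals.items():
        error_rate = errors / checked if checked else 0.0
        ranking.append((name, error_rate, short / n))
    return sorted(ranking, key=lambda row: (row[2], row[1]))


records = [
    InterviewQC("A", 28, 2, 50), InterviewQC("A", 31, 1, 50),
    InterviewQC("B", 25, 0, 50), InterviewQC("B", 12, 0, 50),
    InterviewQC("C", 26, 5, 50), InterviewQC("C", 30, 5, 50),
]
print([row[0] for row in rank_enumerators(records)])  # ['A', 'C', 'B']
```

Here enumerator B has no discrepancies but ranks last because half of B's interviews were shorter than the minimum; how strongly short interviews should outweigh errors is a design choice each project would need to make.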